Column-oriented Database Systems

Contact: Daniel Bößwetter

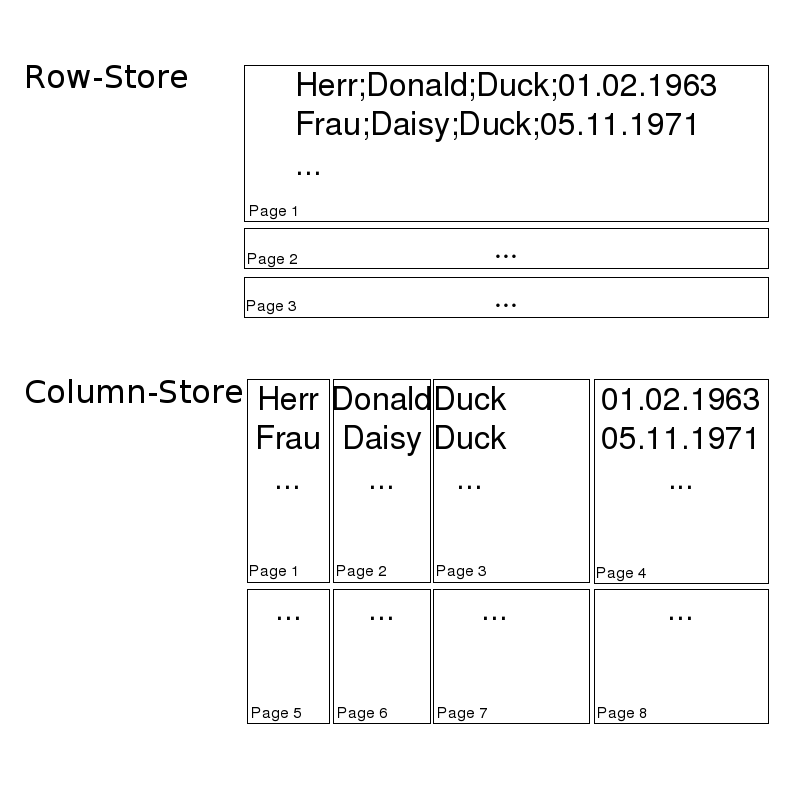

In recent years we have seen the emergence of several “column-oriented” relational databases in academia and industry. These are expected to increase the speed of read-mostly analytical workloads (e.g. in decision support systems), which usually require only a subset of attributes of a relation, but may involve a large percentage of a relation's tuples. The advantages of storing relations column-wise (instead of row-wise) are:

- Only the attributes required for a particular query must cross the boundary between layers of the memory hierarchy (e.g. from disk to RAM to the CPU-cache), resulting in less data to transfer and better cache efficiency on several layers.

- Higher potential for data compression, since only values from a single domain are stored consecutively, sometimes even self-sorted. This allows for light-weight compression techniques like delta compression and run-length-encoding which can be operated upon without prior decompression.

However, there is an ongoing dispute among researchers about the real benefit of column-orientation over its disadvantages:

- The architecture of the DBMS must be changed radically, thus putting at risk the investments of decades of huge database vendors. E.g. prior research has shown that a tuple-by-tuple execution as done by row-based DBMS is inferior with respect to throughput when compared to a block-by-block approach. Moreover, the concept of early-projection known from traditional RDBMS becomes a late-materialization in column-stores, giving rise to changes in the optimizer.

- From a naïve point of view, the reconstruction of individual tuples (or parts thereof) can be seen as a join of columns contained in the logical relation, which can become very expensive when many columns are affected. Only when a large percentage of tuples is reconstructed, “stitching” tuples together can be an efficient alternative.

- Decreased write-performance, because inserts and update now require one page per attribute to be forced to stable media instead of one page per tuple. This is usually circumvented with “nightly batch-imports” or hybrid architectures (e.g. in C-Store).

In this project, we try to combine the best of both worlds into a system with the following goals:

- Read-performance near that of a column-store with a focus on tuple-reconstruction.

- Write-performance with a predictable upper-bound, although it may be slower than a OLTP-optimized system.

Part of our consideration will be new trends in hardware development like Flash memory and Multicore architectures, both of which influence the way databases are built.